vocab.txt · nvidia/megatron-bert-cased-345m at main

By a mysterious writer

Description

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

The Controversy Behind Microsoft-NVIDIA's Megatron-Turing Scale

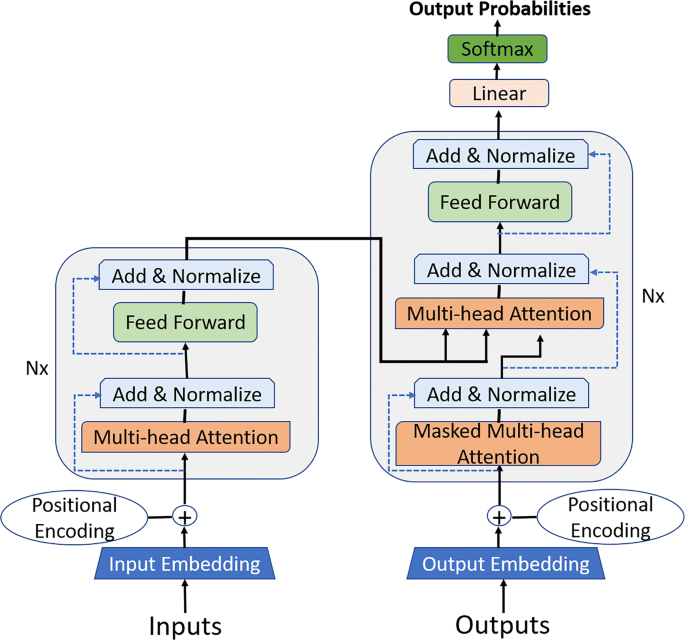

A Survey on Efficient Training of Transformers – arXiv Vanity

Nvidia Shaves up to 30% off Large Language Model Training Times - The New Stack

BioMegatron345m-biovocab-30k-cased

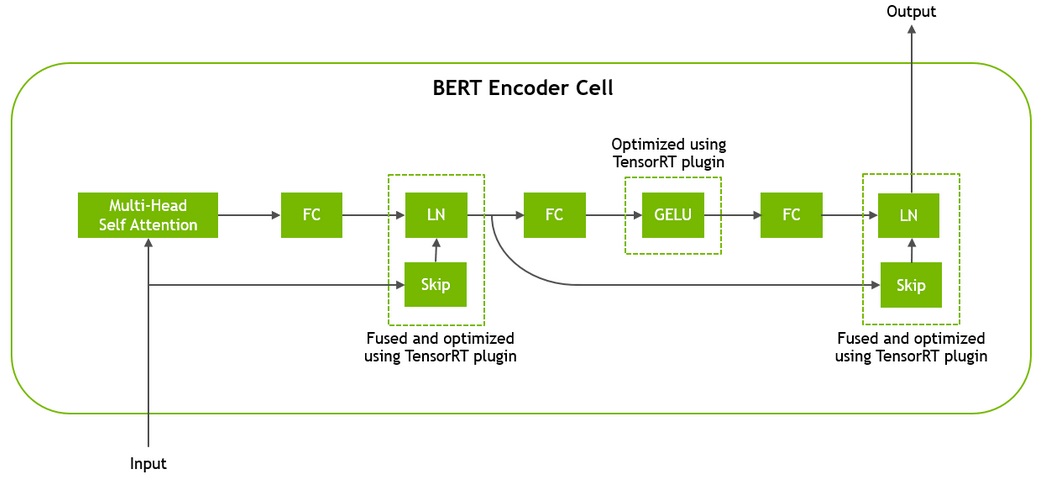

Real-Time Natural Language Understanding with BERT Using TensorRT

(PDF) Foundation Models for Natural Language Processing: Pre-trained Language Models Integrating Media

Megatron-DeepSpeed/ at main · microsoft/Megatron-DeepSpeed · GitHub

AI, Free Full-Text

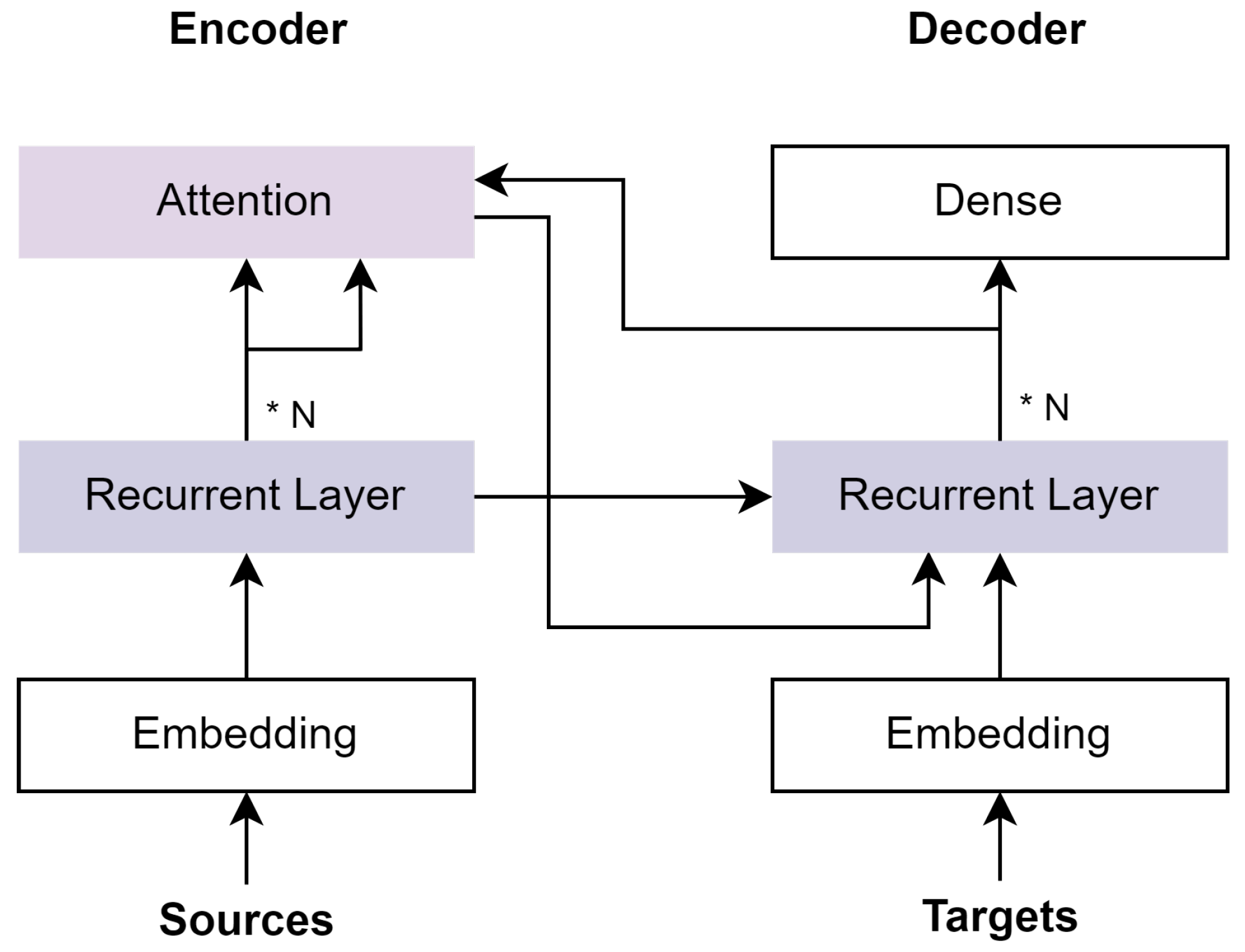

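zhangy03/Hybrid-Parallel-Transformer-pytorch: a Transformer-based algorithm library of BERT, GPT, and T5 models covering Chinese and English - README.md at master - Hybrid-Parallel-Transformer-pytorch - OpenI - The OpenI (Qizhi) AI open-source community provides accessible computing power!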

(PDF) A large language model for electronic health records

Transformer models used for text-based question answering systems