A Coefficient of Agreement for Nominal Scales - Jacob Cohen, 1960

By a mysterious writer

Description

Youth: a social force?

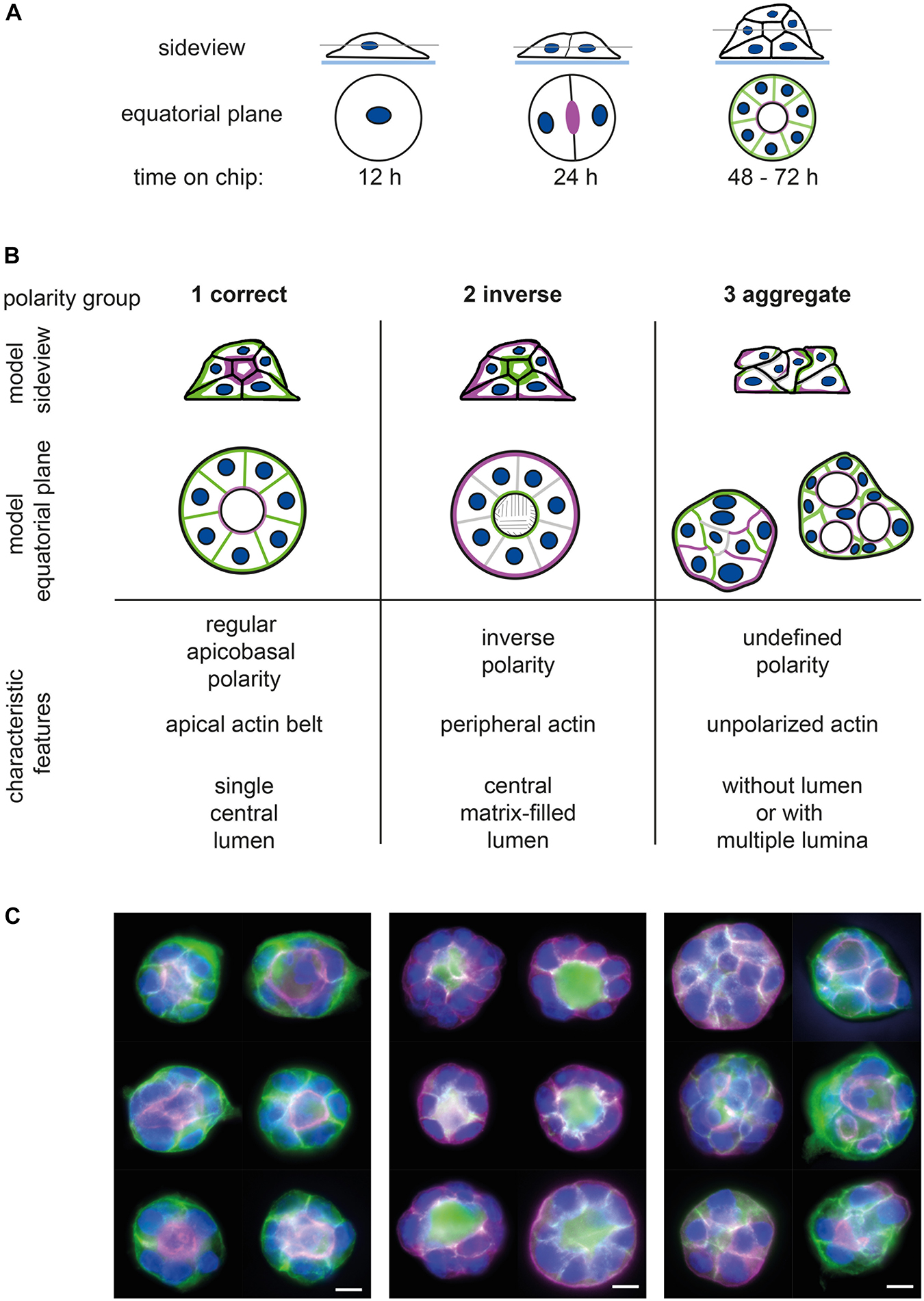

Frontiers | Application and Comparison of Supervised Learning Strategies to Classify Polarity of Epithelial Cell Spheroids in 3D Culture

[PDF] Can One Use Cohen's Kappa to Examine Disagreement?

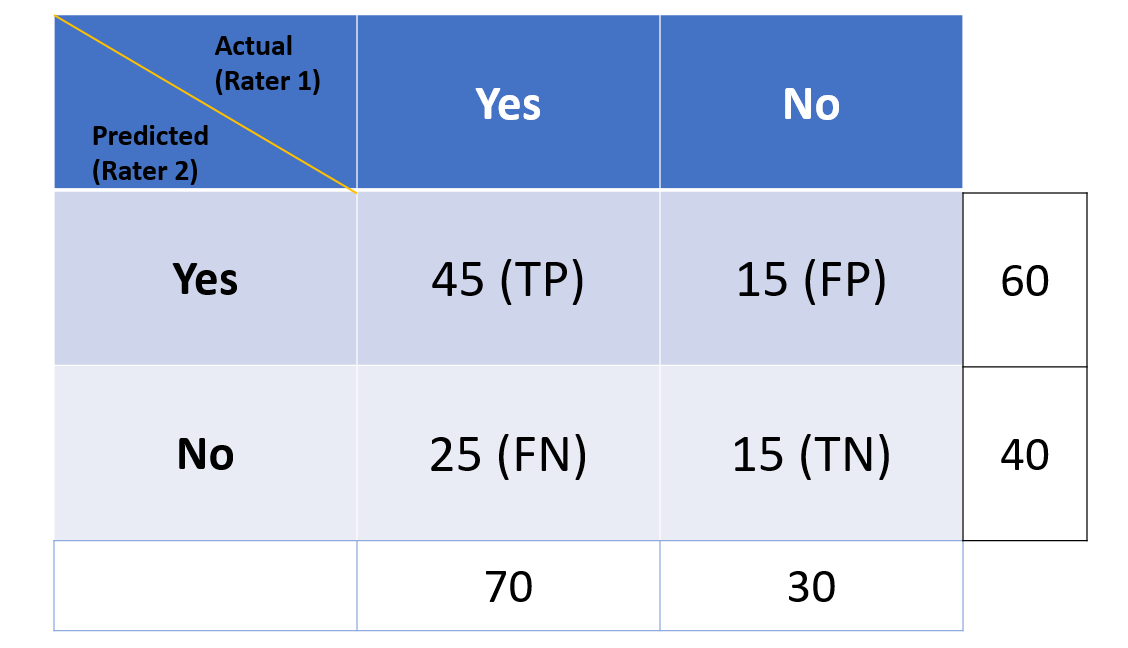

Cohen Kappa Score Python Example: Machine Learning - Analytics Yogi

AES E-Library » Complete Journal: Volume 42 Issue 11

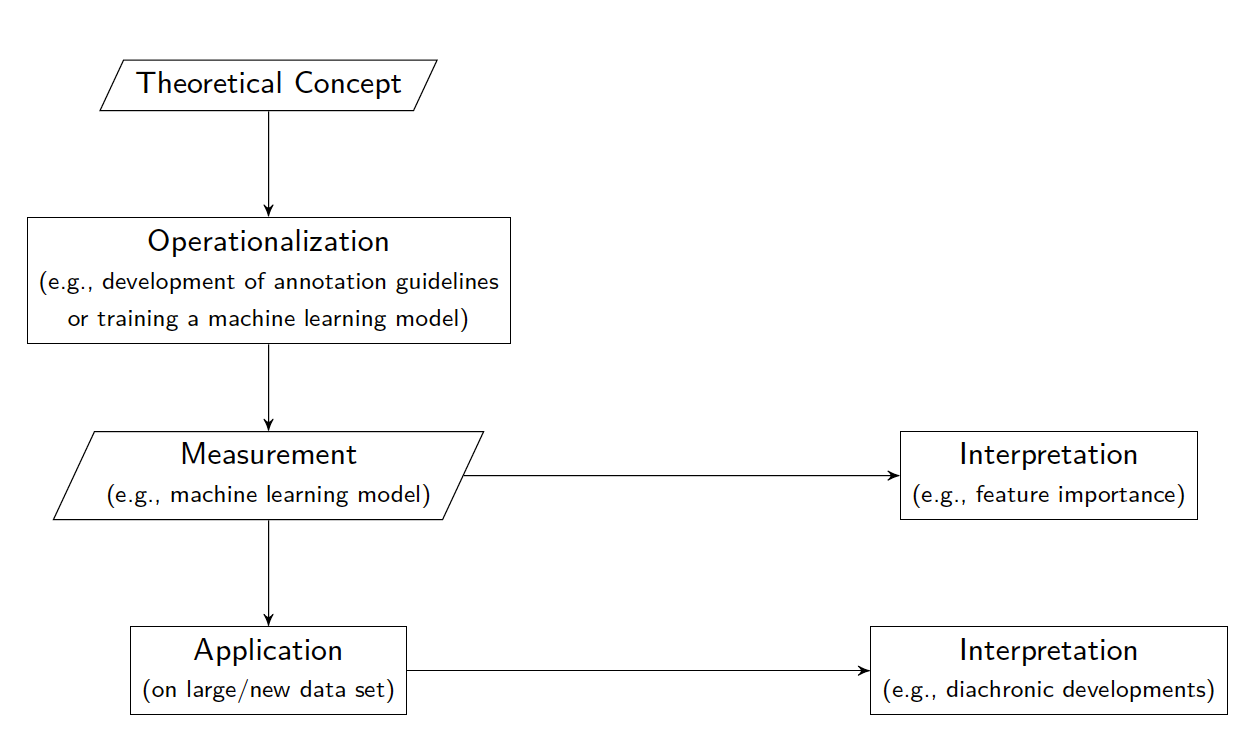

From Concepts to Texts and Back: Operationalization as a Core Activity of Digital Humanities

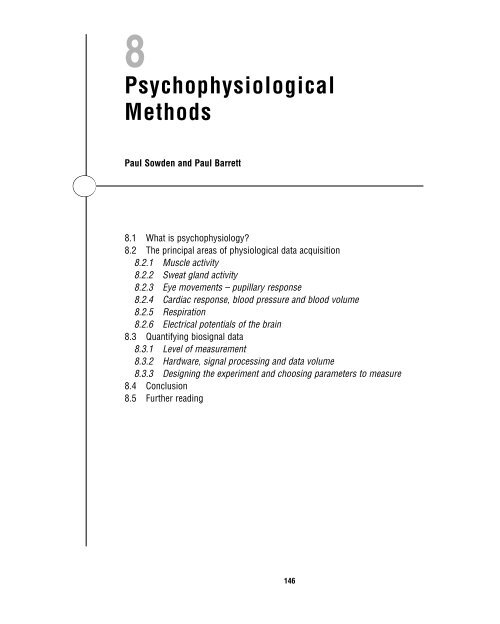

Psychophysiological Methods - Paul Barrett

The Equivalence of Weighted Kappa and the Intraclass Correlation Coefficient as Measures of Reliability - Joseph L. Fleiss, Jacob Cohen, 1973

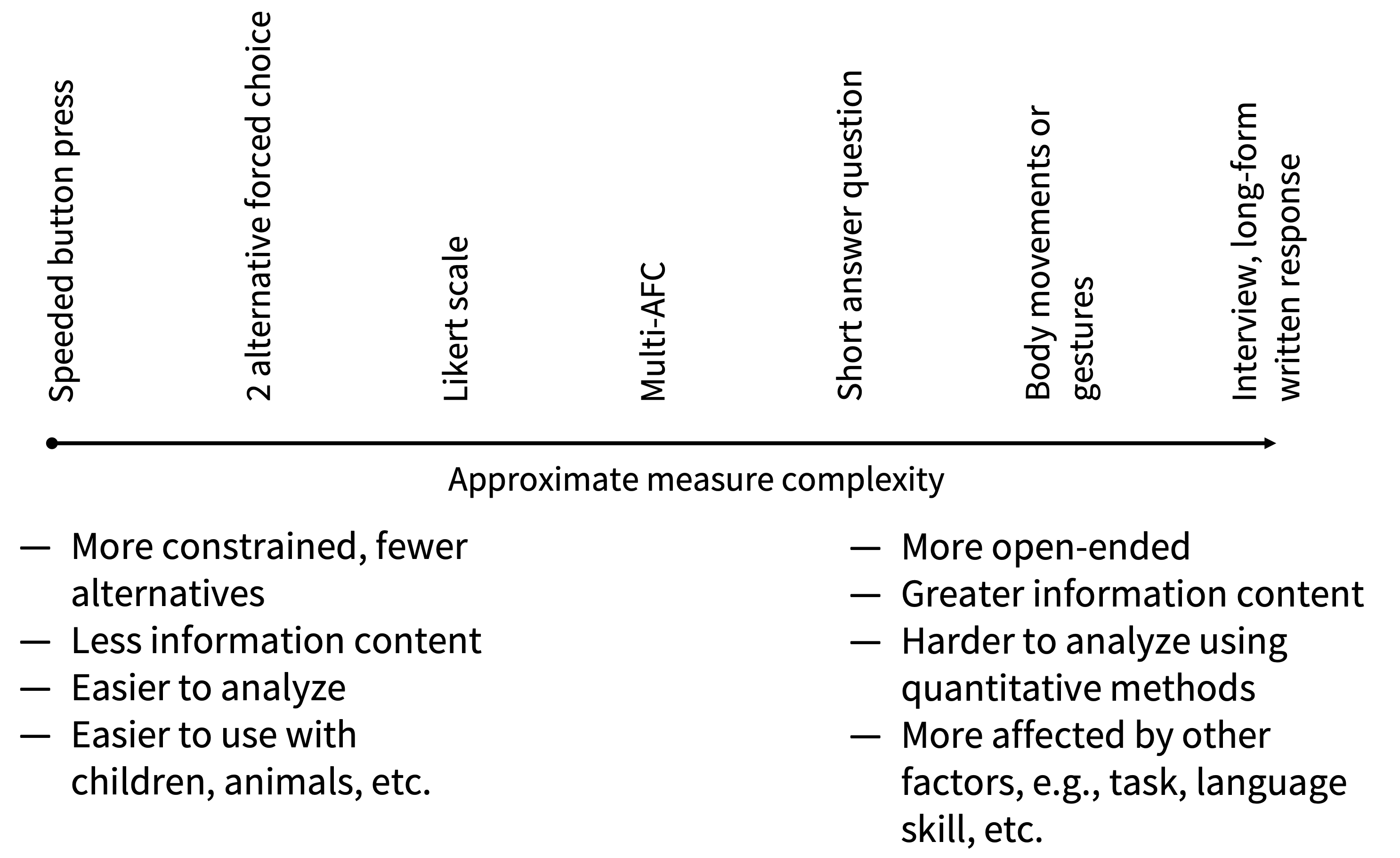

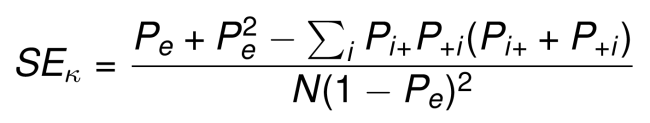

Experimentology - 8 Measurement

Cohen-1960-A Coefficient of Agreement For Nominal Scales, PDF, Measurement

[PDF] A coefficient of agreement as a measure of thematic classification accuracy.

Cohen's Kappa in R: Best Reference - Datanovia

Ask Your Neurons: A Deep Learning Approach to Visual Question Answering – arXiv Vanity

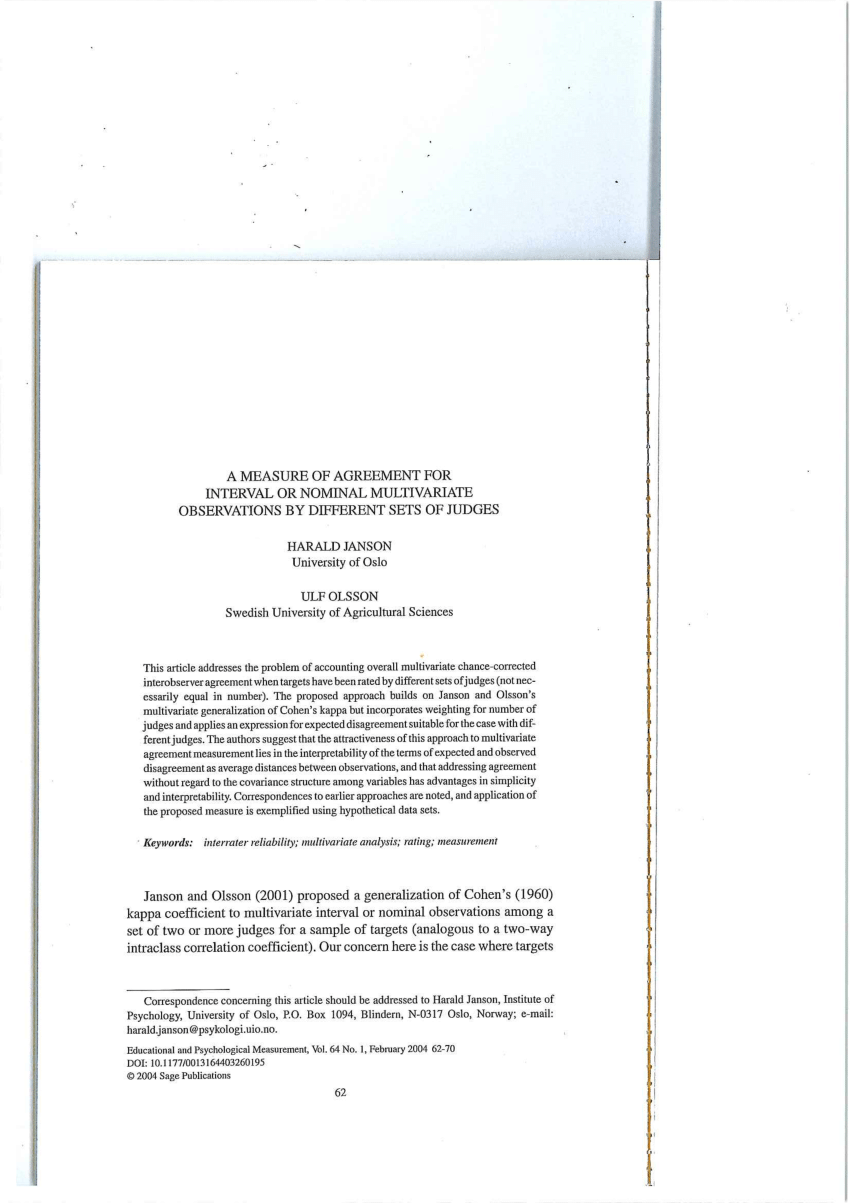

(PDF) A Measure of Agreement for Interval or Nominal Multivariate Observations by Different Sets of Judges
