No Virtualization Tax for MLPerf Inference v3.0 Using NVIDIA Hopper and Ampere vGPUs and NVIDIA AI Software with vSphere 8.0.1 - VROOM! Performance Blog

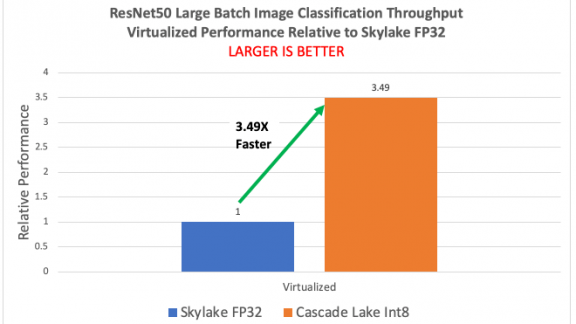

Description

In this blog post, we present MLPerf Inference v3.0 results for the VMware vSphere virtualization platform with NVIDIA H100- and A100-based vGPUs. Our tests show that workloads running on NVIDIA vGPUs in vSphere perform the same as, or better than, the same workloads run on a bare-metal system.

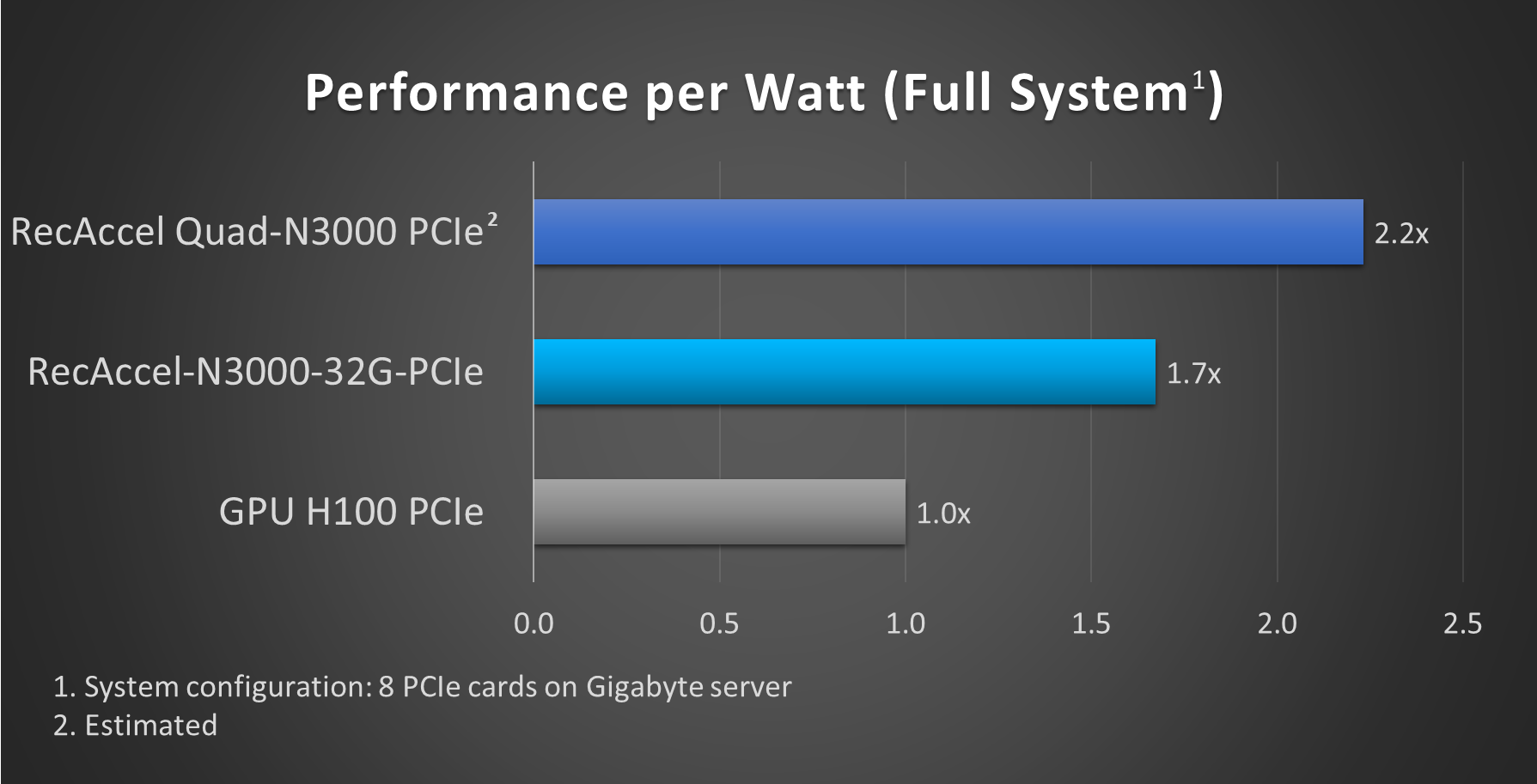

NEUCHIPS RecAccel N3000 Delivers Industry Leading Results for MLPerf v3.0 DLRM Inference Benchmarking

NVIDIA Posts Big AI Numbers In MLPerf Inference v3.1 Benchmarks With Hopper H100, GH200 Superchips & L4 GPUs

MLPerf Inference Virtualization in VMware vSphere Using NVIDIA vGPUs - VROOM! Performance Blog

Setting New Records in MLPerf Inference v3.0 with Full-Stack Optimizations for AI

AI Archives - VROOM! Performance Blog

NVIDIA Hopper AI Inference Benchmarks in MLPerf Debut Sets World Record

vSphere Archives - VROOM! Performance Blog

Release Notes - NVIDIA Docs

Benchmarks Archives - VROOM! Performance Blog
