8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Description

- Using easy-to-revise parallelism with `xmap()`
- Compiling and automatically partitioning functions with `pjit()`
- Using tensor sharding to achieve parallelization with XLA
- Running code in multi-host configurations
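The tensor-sharding topic can be illustrated with a short example. This sketch is not taken from the chapter. It assumes a recent JAX release, where `jax.jit` accepts sharded inputs and performs the automatic partitioning that `pjit()` introduced. The mesh axis name `"data"` and the function `layer` are hypothetical choices made for illustration. On a machine with a single CPU device the code still runs, but the "sharding" then places the whole array on that one device.

```python
import numpy as np
import jax
import jax.numpy as jnp
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D device mesh over every available device; "data" is a hypothetical axis name.
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))
n = len(devices)

# A batch whose leading axis is divisible by the number of devices.
x = jnp.arange(8 * n * 4, dtype=jnp.float32).reshape(8 * n, 4)

# Split rows of x across the "data" mesh axis; the second axis is replicated (None).
x_sharded = jax.device_put(x, NamedSharding(mesh, P("data", None)))

# Hypothetical layer: XLA partitions the compiled computation to follow the input sharding.
@jax.jit
def layer(x, w):
    return jnp.tanh(x @ w)

w = jnp.ones((4, 2), dtype=jnp.float32)  # small weight matrix, left unsharded
y = layer(x_sharded, w)

print(y.shape)     # shape is (8 * n, 2)
print(y.sharding)  # how XLA chose to lay out the result across devices
```

Only the input layout is declared here. The compiler propagates a sharding to the matmul and its output, which is the property that makes this style of parallelism easy to revise: changing the `PartitionSpec` changes the distribution without touching `layer`.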

Introducing Neuropod, Uber ATG's Open Source Deep Learning

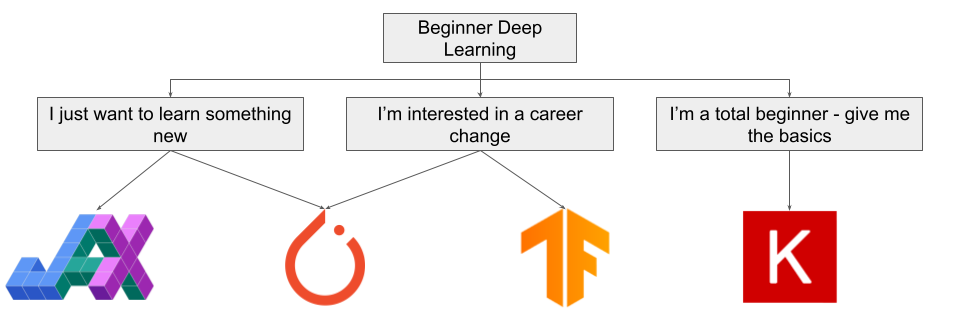

Why You Should (or Shouldn't) be Using Google's JAX in 2023

Grigory Sapunov on LinkedIn: Deep Learning with JAX

Tutorial 2 (JAX): Introduction to JAX+Flax — UvA DL Notebooks v1.2

Learn JAX in 2023: Part 2 - grad, jit, vmap, and pmap

Learning JAX in 2023: Part 1 — The Ultimate Guide to Accelerating

Breaking Up with NumPy: Why JAX is Your New Favorite Tool

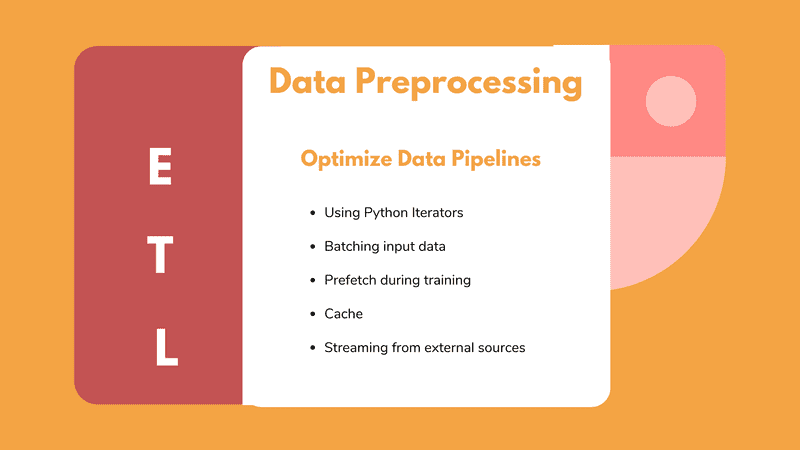

Data preprocessing for deep learning: Tips and tricks to optimize

Using JAX to accelerate our research - Google DeepMind

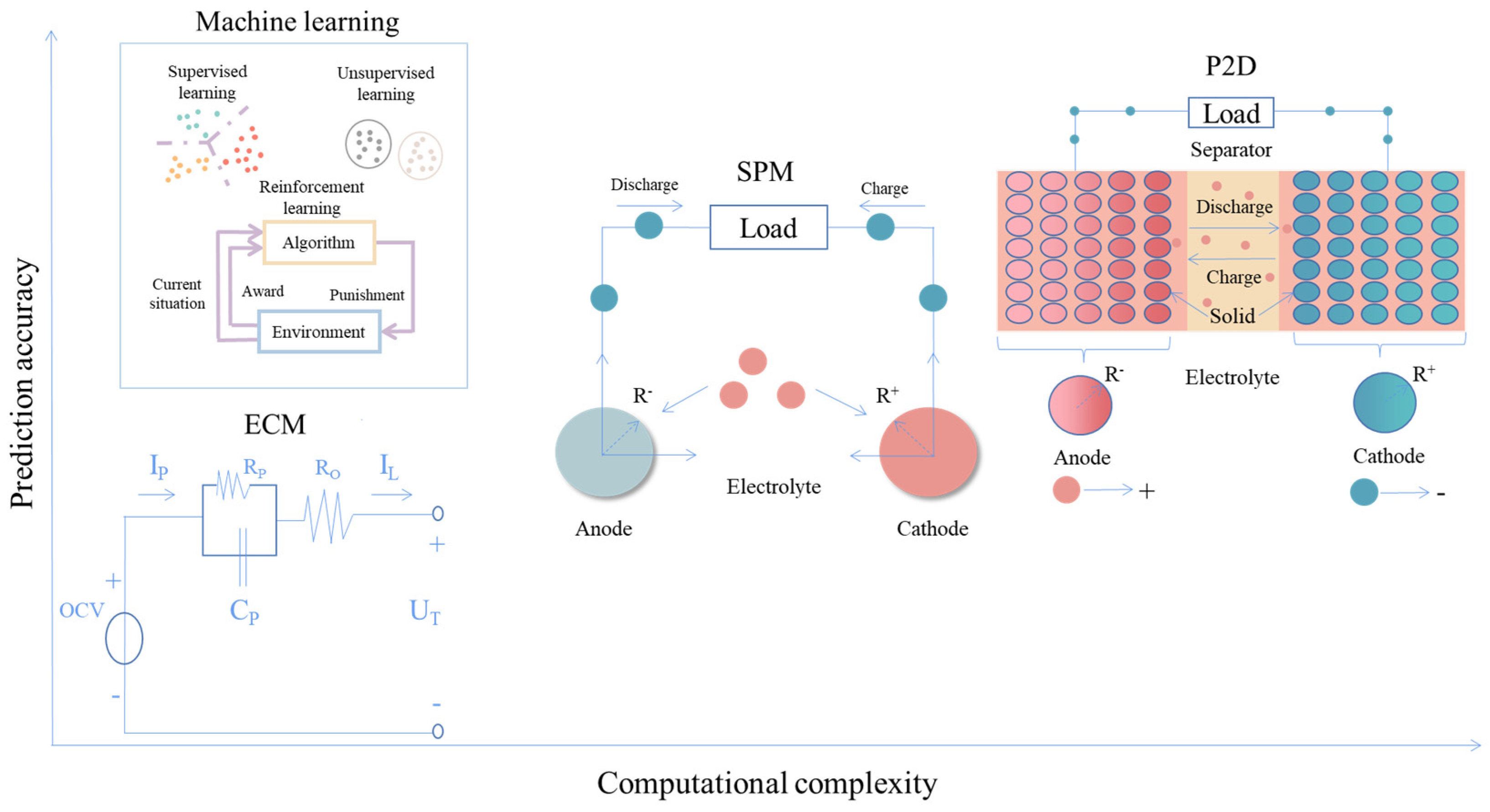

Energies, Free Full-Text

Exploring Quantum Machine Learning: Where Quantum Computing Meets

Tutorial 6 (JAX): Transformers and Multi-Head Attention — UvA DL